Artificial intelligence has already transformed how research is carried out in the life sciences, but the real challenge comes when we try to scale these models beyond the controlled environment of the lab and into commercial manufacturing. For pharmaceutical companies, where safety, compliance, and efficiency are paramount, the journey from a prototype AI model to a validated, production-ready system is often slow, risky, and expensive. The Model Context Protocol (MCP) is emerging as one of the most promising ways to make this transition smoother. MCP is a lightweight open standard that lets AI agents connect to enterprise systems such as LIMS, MES, SCADA, and ERP through reusable MCP servers rather than an endless series of custom connectors. By standardizing how models interact with data, tools, and workflows, MCP creates a common layer that reduces integration effort and gives organizations a single place to maintain traceability and enforce governance. For industries like pharma manufacturing, this can be the difference between experimental AI pilots that never leave the lab and production-ready systems that generate measurable value.

The stakes of this transition are high. In a heavily regulated environment such as pharma, AI is not just another IT tool; it can directly influence product quality, patient safety, and compliance with global health authorities. A model that works well in R&D can still fail in manufacturing if it cannot integrate with real-time process data, scale across multiple production sites, or survive regulatory audits. Without clear standards, most organizations end up building one-off connectors and manual validation processes for every model. This creates silos, slows deployment, and increases risk. MCP offers a way out of this bottleneck by acting as a universal “socket” for AI models, allowing them to plug into existing systems in a predictable and auditable manner.

MCP for Scaling AI Models from Research to Manufacturing

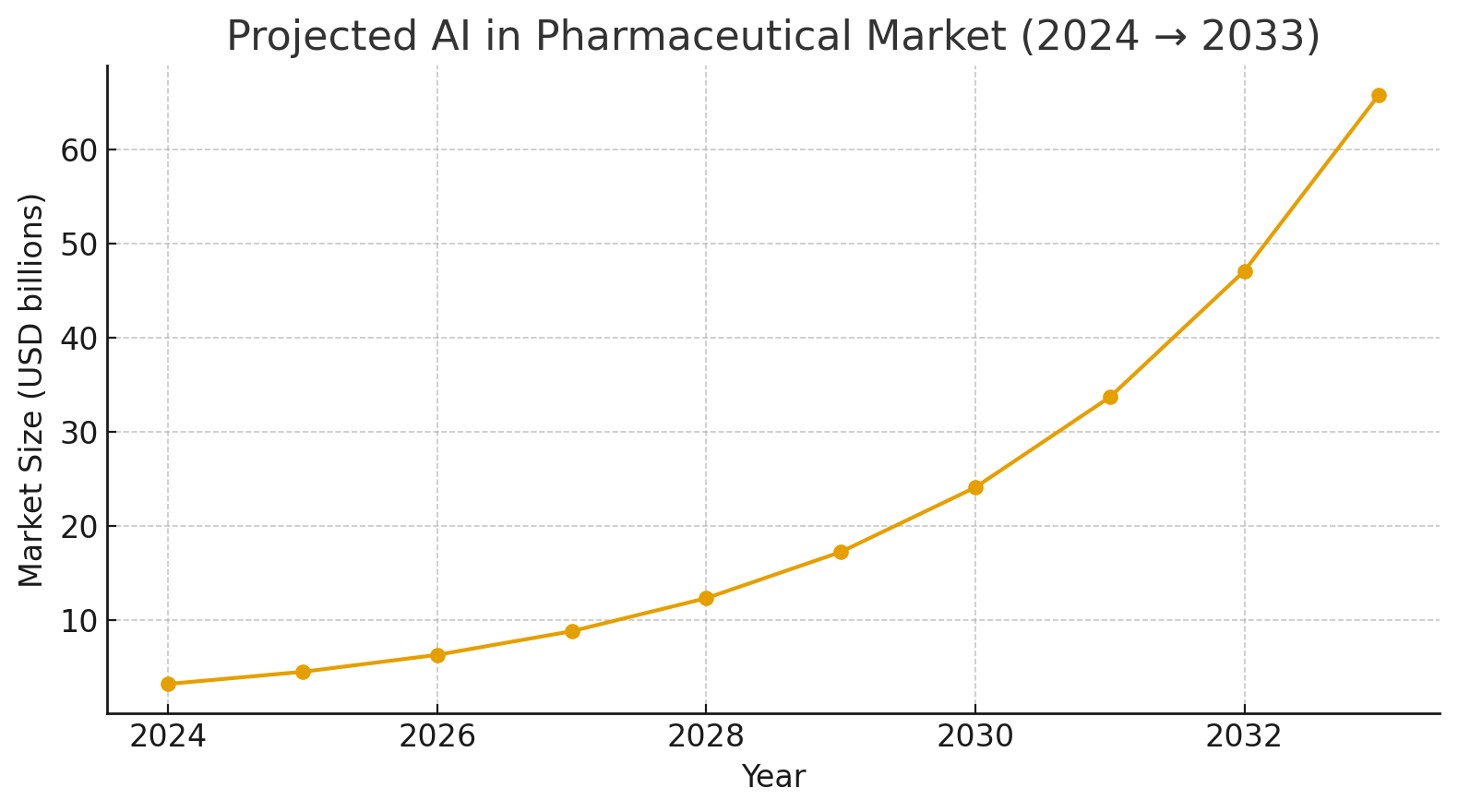

To understand why MCP is so relevant now, it helps to look at broader AI adoption trends in pharma. According to Stanford’s AI Index, nearly 78% of organizations reported using some form of AI in 2024, a sign that many enterprises have moved beyond experimentation to active deployment. In the pharmaceutical sector specifically, the market for AI is projected to grow from about USD 3.24 billion in 2024 to nearly USD 65.8 billion by 2033, which implies a compound annual growth rate of roughly 40%. Growth at that pace explains why vendors, regulators, and pharma leaders are paying close attention to robust deployment frameworks. Separate reports size AI in pharmaceutical manufacturing alone at roughly USD 0.64 to 1.74 billion in 2024, depending on the estimate, with double-digit annual growth projected over the next decade. The sheer speed of adoption makes the need for standardization and efficiency all the more pressing.

Now, what exactly is MCP, and why is it suited to this environment? At its core, MCP defines how AI applications and agents communicate with external systems. Instead of relying on custom scripts or proprietary APIs, MCP specifies a small set of standard messages, carried over JSON-RPC 2.0, through which a server exposes data (resources), actions (tools), and reusable prompts, plus an OAuth-based authorization flow for servers reached over HTTP. Think of it as a shared language between models and enterprise infrastructure. For pharma, where dozens of systems coexist across research, development, and manufacturing, this standardized language lets teams avoid duplicating integration work: build an MCP adapter (server) for a lab instrument or an MES once, and any MCP-compatible agent or application can use it.
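
To make the “build once, reuse everywhere” idea concrete, here is a minimal sketch of what an adapter does on the wire, using only the Python standard library. The JSON-RPC 2.0 envelope and the `tools/list` / `tools/call` method names come from the MCP specification; the in-memory LIMS, the `get_sample_result` tool, and its fields are hypothetical. The sketch omits the MCP initialization handshake and transport, and a production adapter would more likely be built on an official MCP SDK.

```python
import json
from typing import Any, Callable, Dict, Tuple

# Hypothetical stand-in for a LIMS; a real adapter would query the LIMS API.
FAKE_LIMS = {"S-1001": {"assay": "potency", "value": 98.7, "unit": "%"}}


def get_sample_result(sample_id: str) -> dict:
    """Hypothetical read-only LIMS lookup exposed as a tool."""
    if sample_id not in FAKE_LIMS:
        raise ValueError(f"unknown sample {sample_id}")
    return FAKE_LIMS[sample_id]


# Tool registry: name -> (description, JSON Schema for arguments, handler).
TOOLS: Dict[str, Tuple[str, dict, Callable[..., Any]]] = {
    "get_sample_result": (
        "Return the released assay result for a LIMS sample.",
        {
            "type": "object",
            "properties": {"sample_id": {"type": "string"}},
            "required": ["sample_id"],
        },
        get_sample_result,
    ),
}


def _reply(req_id: Any, **payload: Any) -> str:
    """Wrap a result or error in a JSON-RPC 2.0 response envelope."""
    return json.dumps({"jsonrpc": "2.0", "id": req_id, **payload})


def handle(message: str) -> str:
    """Answer one JSON-RPC request for the tools/list or tools/call method."""
    req = json.loads(message)
    req_id, method = req.get("id"), req.get("method")
    if method == "tools/list":
        tools = [
            {"name": name, "description": desc, "inputSchema": schema}
            for name, (desc, schema, _) in TOOLS.items()
        ]
        return _reply(req_id, result={"tools": tools})
    if method == "tools/call":
        params = req.get("params", {})
        name = params.get("name")
        if name not in TOOLS:
            return _reply(req_id, error={"code": -32602, "message": f"Unknown tool: {name}"})
        handler = TOOLS[name][2]
        try:
            data = handler(**params.get("arguments", {}))
            return _reply(req_id, result={
                "content": [{"type": "text", "text": json.dumps(data)}],
                "isError": False,
            })
        except Exception as exc:  # tool failures go back to the caller as results
            return _reply(req_id, result={
                "content": [{"type": "text", "text": str(exc)}],
                "isError": True,
            })
    return _reply(req_id, error={"code": -32601, "message": "Method not found"})


if __name__ == "__main__":
    request = {
        "jsonrpc": "2.0", "id": 1, "method": "tools/call",
        "params": {"name": "get_sample_result", "arguments": {"sample_id": "S-1001"}},
    }
    print(handle(json.dumps(request)))
```

The point of the design is that nothing in `handle` is specific to one model: any client that speaks the protocol can discover the tool through `tools/list` and call it through `tools/call`.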

Practical Benefits of MCP in Scaling AI

The benefits of this standardization show up quickly when we analyze the typical pain points of scaling AI. Integration overhead is one of the most common. Without MCP, every new model requires engineers to build bespoke connectors to multiple databases and tools. With MCP, one reusable adapter can serve every model, which can cut integration time from weeks to days. Another challenge is traceability. Regulators routinely ask, “How did this decision come about?” With ad-hoc integrations, tracing which dataset, parameters, and model version led to a particular manufacturing decision can be extremely difficult. MCP makes this tractable: because every request and response follows the same structured message format, context and interaction logs can be captured consistently at a single layer, making it easier to reconstruct the chain of evidence.
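
MCP does not itself prescribe an audit-log format, so the following is only a sketch of one way to capture the chain of evidence at the gateway. Each record stores the model, version, dataset, parameters, and the MCP request and response, plus the SHA-256 hash of the previous record, which makes later tampering detectable. The `AuditLog` class and its field names are hypothetical; a real 21 CFR Part 11 audit trail would also need user attribution, trusted timestamps, and protected storage.

```python
import hashlib
import json
from datetime import datetime, timezone
from typing import Dict, List

GENESIS = "0" * 64  # placeholder "previous hash" for the first record


def _digest(body: dict) -> str:
    """SHA-256 over canonical JSON (sorted keys) so the hash is reproducible."""
    return hashlib.sha256(json.dumps(body, sort_keys=True).encode()).hexdigest()


class AuditLog:
    """Append-only, hash-chained log of model interactions (hypothetical schema)."""

    def __init__(self) -> None:
        self.entries: List[Dict] = []

    def append(self, model: str, version: str, dataset: str,
               params: dict, request: dict, response: dict) -> Dict:
        body = {
            "timestamp": datetime.now(timezone.utc).isoformat(),
            "model": model, "version": version, "dataset": dataset,
            "params": params, "request": request, "response": response,
            "prev_hash": self.entries[-1]["hash"] if self.entries else GENESIS,
        }
        entry = {**body, "hash": _digest(body)}
        self.entries.append(entry)
        return entry

    def verify(self) -> bool:
        """Recompute every hash; any edited or removed record breaks the chain."""
        prev_hash = GENESIS
        for entry in self.entries:
            body = {k: v for k, v in entry.items() if k != "hash"}
            if body["prev_hash"] != prev_hash or _digest(body) != entry["hash"]:
                return False
            prev_hash = entry["hash"]
        return True
```

Because the hash covers the dataset, parameters, and model version together with the request and response, answering “which inputs produced this decision?” becomes a lookup instead of a forensic exercise.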

Security is another major concern in pharma, where sensitive patient data, lab results, and proprietary manufacturing parameters must remain protected. A well-designed MCP deployment separates identity from capability: models do not hold long-lived credentials or database passwords. Instead, access is granted through short-lived tokens and predefined policies, which is much easier to audit and significantly reduces the attack surface. MCP also makes it possible to profile how a model is supposed to behave. Each MCP tool declares its expected inputs as a JSON Schema, and teams can build on this to define which outputs are valid and which value ranges are acceptable. These profiles can then be tested automatically, reducing the manual validation burden required for GxP and 21 CFR Part 11 compliance.
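
A behavior profile of this kind can be checked automatically against a batch of test cases. The sketch below is an assumption-laden illustration, not part of MCP: `ModelProfile`, `FieldSpec`, the bioreactor fields, and `toy_model` are all hypothetical, and a real harness would more likely reuse the tools’ JSON Schemas with a schema validator instead of hand-written checks.

```python
from dataclasses import dataclass
from typing import Callable, Dict, List, Optional, Tuple

NUMBER = (int, float)


@dataclass
class FieldSpec:
    """Expected type(s) and optional inclusive range for one field."""
    types: Tuple[type, ...]
    min_value: Optional[float] = None
    max_value: Optional[float] = None


@dataclass
class ModelProfile:
    """Hypothetical behavior profile: what a model accepts and may return."""
    inputs: Dict[str, FieldSpec]
    outputs: Dict[str, FieldSpec]


def check(values: dict, spec: Dict[str, FieldSpec], kind: str) -> List[str]:
    """Return human-readable violations; an empty list means the values conform."""
    problems = []
    for name, fs in spec.items():
        if name not in values:
            problems.append(f"{kind} '{name}' missing")
            continue
        value = values[name]
        if not isinstance(value, fs.types):
            problems.append(f"{kind} '{name}' has unexpected type {type(value).__name__}")
            continue
        if fs.min_value is not None and value < fs.min_value:
            problems.append(f"{kind} '{name}'={value} below {fs.min_value}")
        if fs.max_value is not None and value > fs.max_value:
            problems.append(f"{kind} '{name}'={value} above {fs.max_value}")
    return problems


def run_profile_tests(profile: ModelProfile, model: Callable[[dict], dict],
                      cases: List[dict]) -> List[str]:
    """Check each case's inputs, call the model, then check its outputs."""
    failures = []
    for i, case in enumerate(cases):
        input_errors = check(case, profile.inputs, "input")
        if input_errors:
            failures += [f"case {i}: {e}" for e in input_errors]
            continue  # never call the model on out-of-profile inputs
        output = model(case)
        failures += [f"case {i}: {e}" for e in check(output, profile.outputs, "output")]
    return failures


# Hypothetical profile for a bioreactor yield model.
profile = ModelProfile(
    inputs={"temperature_c": FieldSpec(NUMBER, 20, 40), "ph": FieldSpec(NUMBER, 6.5, 7.5)},
    outputs={"predicted_yield_pct": FieldSpec(NUMBER, 0, 100)},
)


def toy_model(x: dict) -> dict:
    """Hypothetical stand-in for the model under validation."""
    return {"predicted_yield_pct": 85.0 + (x["temperature_c"] - 30) * 2}


if __name__ == "__main__":
    cases = [{"temperature_c": 37, "ph": 7.0}, {"temperature_c": 45, "ph": 7.0}]
    print(run_profile_tests(profile, toy_model, cases))
    # prints ["case 1: input 'temperature_c'=45 above 40"]
```

Running the same cases on every model version turns part of the validation protocol into a repeatable, recorded test rather than a manual review.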

The advantages are not just theoretical. Because every model interaction passes through the same protocol, manufacturing teams can collect telemetry at a single layer and monitor models in real time. Instead of stitching together logs from different tools, they get uniform metrics on latency, payload size, and errors across all models. This unified observability shortens response times when issues arise and makes SLAs easier to enforce consistently. A standard interface also simplifies safe deployment strategies such as canary releases, in which a new model version handles only a small fraction of production traffic until it proves stable and is then scaled up. This staged rollout substantially reduces the risk of deploying AI into critical manufacturing lines.
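
The traffic split behind a canary release can be as simple as hashing a stable key. This is a minimal sketch under the assumption that routing happens at a gateway in front of two model versions; the `route` function and the batch-ID keys are hypothetical.

```python
import hashlib
from collections import Counter


def route(request_key: str, canary_percent: float) -> str:
    """Return "canary" for roughly canary_percent% of keys, else "stable".

    Hashing the key (for example a batch or equipment ID) is deterministic,
    so the same key always goes to the same model version during a rollout.
    """
    digest = hashlib.sha256(request_key.encode("utf-8")).digest()
    bucket = int.from_bytes(digest[:8], "big") % 10_000  # 0..9999
    return "canary" if bucket < canary_percent * 100 else "stable"


if __name__ == "__main__":
    # Start with 5% of (hypothetical) batch IDs on the new version.
    counts = Counter(route(f"BATCH-{i}", 5) for i in range(10_000))
    print(counts)  # expect about 5% of keys on the canary
```

Deterministic hashing has a useful side effect for audits: raising the percentage only adds keys to the canary, so any key that was already on the canary stays there and its history remains comparable.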

For pharmaceutical manufacturing, these technical features translate into practical outcomes. Faster validation cycles mean that models can move from R&D to production more quickly, giving companies a competitive edge in time-to-market. Traceability means that when regulators audit a site, the company can produce detailed logs that explain every AI-assisted decision, reducing the chance of compliance penalties. Standardized telemetry reduces downtime, as anomalies can be detected and addressed faster. And change management becomes smoother, since MCP’s predictable architecture fits naturally into existing quality management systems and electronic change control processes. Importantly, the focus on least privilege and data minimization helps companies meet data protection requirements such as HIPAA when models touch clinical data.

Industry Momentum and Adoption of MCP

The industry momentum behind MCP also strengthens its credibility. Anthropic introduced MCP as an open standard to make AI agents more secure and interoperable, and since then, enterprise players such as IBM and Google Cloud have published material highlighting its importance. Microsoft and other large technology vendors have also supported the idea of agent standards that reduce bespoke plumbing and allow easier collaboration across multi-agent systems. In life sciences, consortiums are already forming around federated data sharing and AI-driven discovery, and MCP fits naturally into these projects by providing a common interface for collaboration.

Architecture patterns in pharma manufacturing illustrate how MCP can be applied in real-world settings. One pattern is the adapter model, where MCP-compliant servers expose systems like LIMS, MES, and SCADA as services that models can consume. Another is the use of MCP gateways with policy engines that enforce role-based access, audit trails, and throttle rules before allowing models to interact with production systems. Validation harnesses built around MCP profiles allow automated testing against historical and simulated data, providing repeatable evidence for regulators. Observability stacks unify logs and metrics into dashboards, while deployment patterns like canary releases give IT and QA teams more control over risk. Together, these patterns create a blueprint for integrating AI into manufacturing without compromising compliance.
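
The gateway pattern can be sketched as a single `authorize` check that runs before any request is forwarded to an MCP server: verify a short-lived token, apply a role-based policy, then apply a per-caller throttle. Everything here is an illustrative assumption: the HMAC-signed token format, the role and tool names, and the token-bucket limits. A production gateway would more likely rely on OAuth 2.1 access tokens from an identity provider, which is what MCP’s authorization specification builds on for HTTP transports.

```python
import base64
import hashlib
import hmac
import json
import time
from typing import Dict, Optional, Set, Tuple

SECRET = b"demo-only-secret"  # hypothetical; use a managed signing key in practice

# Hypothetical role -> allowed MCP tool names.
POLICY: Dict[str, Set[str]] = {
    "qa-reviewer": {"get_batch_record"},
    "maintenance-model": {"read_sensor_history"},
}


def _sign(body: str) -> str:
    return hmac.new(SECRET, body.encode(), hashlib.sha256).hexdigest()


def issue_token(subject: str, role: str, ttl_s: int = 300, now: Optional[float] = None) -> str:
    """Issue a short-lived, signed token (default lifetime: 5 minutes)."""
    now = time.time() if now is None else now
    claims = {"sub": subject, "role": role, "exp": now + ttl_s}
    body = base64.urlsafe_b64encode(json.dumps(claims).encode()).decode()
    return f"{body}.{_sign(body)}"


def verify_token(token: str, now: float) -> Optional[dict]:
    """Return the claims if the signature is valid and the token is unexpired."""
    body, _, sig = token.rpartition(".")
    if not body or not hmac.compare_digest(sig, _sign(body)):
        return None
    claims = json.loads(base64.urlsafe_b64decode(body))
    return claims if claims["exp"] > now else None


class TokenBucket:
    """Allow bursts up to `capacity`, refilled at `rate` requests per second."""

    def __init__(self, rate: float, capacity: int) -> None:
        self.rate, self.capacity = rate, capacity
        self.tokens = float(capacity)
        self.last: Optional[float] = None

    def allow(self, now: float) -> bool:
        if self.last is not None:
            self.tokens = min(self.capacity, self.tokens + (now - self.last) * self.rate)
        self.last = now
        if self.tokens >= 1:
            self.tokens -= 1
            return True
        return False


_buckets: Dict[str, TokenBucket] = {}


def authorize(token: str, tool: str, now: Optional[float] = None) -> Tuple[bool, str]:
    """Gateway check run before a request is forwarded to an MCP server."""
    now = time.time() if now is None else now
    claims = verify_token(token, now)
    if claims is None:
        return False, "invalid or expired token"
    if tool not in POLICY.get(claims["role"], set()):
        return False, f"role {claims['role']} may not call {tool}"
    bucket = _buckets.setdefault(claims["sub"], TokenBucket(rate=2.0, capacity=5))
    if not bucket.allow(now):
        return False, "rate limit exceeded"
    return True, "ok"
```

Each denial reason is a short, stable string, which makes it easy to write every decision into the same audit trail as the allowed calls.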

Of course, no technology is a silver bullet, and it is important to acknowledge MCP’s limitations. It does not guarantee model quality; that still depends on training data and domain expertise. It also requires upfront engineering effort to build adapters and governance pipelines, so organizations must be willing to invest before reaping long-term savings. As with any cross-functional initiative, cultural alignment between R&D, IT, and QA remains critical. Finally, fragmentation is a risk: if providers interpret the standard differently or add proprietary extensions, organizations can end up locked into one implementation, so they must evaluate implementations carefully.

Why MCP Delivers Strong ROI in Pharma

Even so, the cost-benefit ratio is compelling. While the initial setup involves effort, subsequent integrations become much faster and cheaper. Companies can reduce integration time from several weeks per model to just a few days. The benefits include better audit readiness, lower security risk, faster validation, and easier observability. In practice, this enables use cases such as predictive maintenance of equipment, real-time anomaly detection on process variables, automated review of batch release data, yield optimization through combined lab and process analytics, and supplier risk scoring using secure data access. Each of these applications contributes to reduced downtime, higher quality, or better compliance, making the business case strong.

Getting executive buy-in usually requires showing measurable results. MCP adoption can be framed in terms of risk reduction, since it makes audits smoother and validation cycles shorter. It can also be presented as a speed-to-market enabler, since integration timelines shrink dramatically. Executives will also appreciate the improved security posture from short-lived credentials and gateways. And perhaps most importantly, MCP offers flexibility: it is an open standard that reduces dependence on any single vendor, allowing organizations to switch tools without rebuilding everything from scratch.

A practical way to start is with a pilot program. Within 90 days, a cross-functional team can identify a low-risk use case such as predictive maintenance, build the necessary adapters, set up policies, run validation tests, and deploy a model in canary mode. By monitoring telemetry and process KPIs during the pilot, the organization can generate concrete evidence of MCP’s value. If successful, this pilot can then be scaled across sites and expanded to more critical processes.
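
At the end of the pilot, the go/no-go decision for the canary can be written down as an explicit promotion gate agreed in advance with QA. The sketch below compares aggregated telemetry for the stable and canary versions; the `Window` fields, the KPI, and every threshold are hypothetical placeholders, and real acceptance criteria would come from the pilot’s validation plan.

```python
from dataclasses import dataclass
from typing import List, Tuple


@dataclass
class Window:
    """Aggregated telemetry for one model version over the pilot window."""
    requests: int  # assumed > 0 for the stable version
    errors: int
    p95_latency_ms: float
    kpi: float  # hypothetical process KPI where higher is better


def promotion_decision(stable: Window, canary: Window, min_requests: int = 500,
                       max_error_rate_increase: float = 0.005,
                       max_latency_ratio: float = 1.2,
                       min_kpi_ratio: float = 0.98) -> Tuple[bool, List[str]]:
    """Return (promote?, reasons for holding back). All thresholds are placeholders."""
    if canary.requests < min_requests:
        return False, [f"only {canary.requests} canary requests; need {min_requests}"]
    reasons = []
    stable_err = stable.errors / stable.requests
    canary_err = canary.errors / canary.requests
    if canary_err - stable_err > max_error_rate_increase:
        reasons.append(f"error rate {canary_err:.2%} vs {stable_err:.2%}")
    if canary.p95_latency_ms > stable.p95_latency_ms * max_latency_ratio:
        reasons.append(f"p95 latency {canary.p95_latency_ms:.0f} ms vs {stable.p95_latency_ms:.0f} ms")
    if canary.kpi < stable.kpi * min_kpi_ratio:
        reasons.append(f"KPI {canary.kpi:.3f} vs {stable.kpi:.3f}")
    return not reasons, reasons


if __name__ == "__main__":
    stable = Window(requests=10_000, errors=20, p95_latency_ms=120.0, kpi=0.90)
    canary = Window(requests=600, errors=1, p95_latency_ms=130.0, kpi=0.91)
    print(promotion_decision(stable, canary))
```

Returning the reasons alongside the decision gives QA a record of why a version was held back, which can be filed with the change-control documentation.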

Conclusion

MCP is not about replacing scientific rigor or regulatory discipline; it is about making them easier to achieve at scale. In a world where pharma companies are expected to adopt AI quickly but without compromising compliance, MCP provides the missing standard that makes large-scale deployment practical. It reduces duplication of work, improves traceability, strengthens security, and creates a foundation for governed AI in manufacturing. Given the rapid growth of AI in pharma and the clear interest of major technology players in standardizing agent interactions, organizations that adopt MCP now will be far better positioned to scale AI efficiently and responsibly.

People in pharma and life sciences tend to ask similar questions when they first hear about MCP. They want to know whether it is secure enough for regulated data. It can be, provided the implementation uses encryption, short-lived credentials, and strict access controls. They ask whether regulators will accept decisions logged through MCP; regulators care about traceability and reproducibility, both of which MCP supports. They wonder how much time MCP really saves; the savings vary, but organizations that have already built their adapters and governance can see integration time drop from weeks to days. They ask which vendors support MCP; several leading technology companies and cloud providers either back the standard or offer MCP-style implementations. Finally, they want to know where to begin, and the recommendation is clear: form a pilot team with R&D, IT, and QA to ensure alignment from the start.

MCP may not be a magic wand, but it is one of the most practical tools available today for taking AI out of the lab and onto the production line. It addresses the industry’s most pressing needs (speed, security, traceability, and compliance) and sets the stage for a future in which AI is not just an experiment but a core part of pharmaceutical manufacturing.

Frequently Asked Questions

Q1 — Is MCP secure enough for regulated data (clinical, patient, batch records)?

Yes, if it is implemented well. MCP itself is only a protocol, so security depends on the implementation: use short-lived credentials, gateway policy engines, encryption, and role-based access. Organizations have built MCP-style adapters for healthcare that align with HIPAA and 21 CFR Part 11 requirements.

Q2 — Will regulators accept model decisions logged via MCP?

Regulators care about traceability, validation, and reproducibility. MCP’s standardized context objects and logs help produce the required audit trail; however, you must still map those logs into your validation documentation and design history file (DHF) artifacts.

Q3 — How much time does MCP save when integrating a new model?

Savings depend on maturity: after building adapters and governance once, subsequent integrations can drop from several weeks of connector work to days. The real saving is in repeatability and reduced audit rework.

Q4 — Which vendors support MCP today?

Several platform vendors and cloud providers have public materials on MCP and related agent standards (Anthropic, IBM, and major cloud providers). Large vendors and consortia are actively exploring MCP or similar specs.

Q5 — Where do we start: IT, QA, or R&D?

All three. Start with a cross-functional pilot team: R&D provides the model, IT builds adapters and a gateway, and QA defines validation tests. This alignment is essential for success.