tl;dr: MCP (Model Context Protocol) is becoming the backbone of AI–digital twin integration in pharma and life sciences. It links real-time data from manufacturing equipment and quality systems to AI models, creating predictive insights for maintenance, process optimization, and quality control. This integration can reduce unplanned downtime by up to 60%, accelerate batch release by 50%, and speed up deviation closure by 70%. As Pharma 4.0 investments grow beyond $18.5 billion, MCP-driven digital twins are setting a new standard for compliance, traceability, and operational excellence.

What Are MCP, Digital Twins & AI? Definitions & Industry Context

Before diving into MCP (Model Context Protocol), predictive maintenance, and quality control in the pharmaceutical industry, it’s helpful to clearly define terms and then examine how they overlap.

Key Definitions

- Digital Twin: A virtual model or replica of a physical asset, system, process, or facility that mirrors its real-time condition, behavior, and environment using sensor and other operational data, allowing simulation, prediction, and optimization.

- AI / ML: Artificial Intelligence and Machine Learning – techniques including statistical models, deep learning, anomaly detection, and reinforcement learning, used for pattern recognition, forecasting, and decision support.

- Predictive Maintenance: Using data and analytics to forecast equipment failures or process deviations before they happen, enabling maintenance actions only when needed rather than on fixed schedules or after failures.

- Quality Control (QC): Ensuring that products consistently meet required specifications; in pharma, this means tight controls on purity, stability, dosage, and safety, as well as process consistency and regulatory compliance.

- Model Context Protocol (MCP): A relatively new open standard (introduced by Anthropic in late 2024) for interoperable integration of AI models (especially LLMs / agents) with external data sources, tools, and services. It provides a standardized interface through which AI applications can access data and services and invoke tools that execute actions, in a controlled, secure, and context-aware way.

Why These Matter in Pharma/Life Sciences

- Pharma manufacturing requires extreme consistency, traceability, and regulatory compliance (e.g., GMP in manufacturing and DSCSA in the US supply chain).

- Downtime or failures are extremely expensive; batch failures can lead to massive losses, recalls, and reputational damage.

- Processes are complex (bioreactors, fill/finish, purification, etc.), often continuous or semi‐continuous, with many critical quality attributes (CQAs) and critical process parameters (CPPs).

- Because of regulatory oversight, all process changes, data, deviations, and corrective actions must be documented with audit trails.

Digital twins and AI help with simulation, monitoring, prediction, and optimization; MCP provides a standard way to connect AI to tools and external data.

MCP: What Is It, How It Works & Why It’s Emerging Now

MCP is new: Anthropic announced it in November 2024. Its main features (and limitations to date) are:

- Open-source standard designed to enable AI agents/models to connect to external data sources, tools, files, APIs, etc., in a standardized, secure way.

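- Provides servers & clients: MCP servers expose resources (data), tools (callable functions), and prompts; MCP clients, running inside an AI application (the host), connect to those servers and request their capabilities on the model's behalf. The specification includes an OAuth-based authorization framework for HTTP transports; finer-grained access control and metadata/context conventions are left largely to each server implementation.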

- Clients can read data on demand and, where a server supports it, subscribe to notifications when a resource changes; whether the data is real-time or historical depends on what the server exposes. The aim is to avoid building a separate bespoke integration for every pairing of data source and AI application.

- It is especially relevant where multiple AI models or agents may collaborate, or where AI needs to draw on large, varied data sets (e.g., sensors + batch records + QC analytics + environmental data, etc.) under regulatory or security constraints.
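To make the server/client exchange concrete: MCP messages are JSON-RPC 2.0 objects, and `resources/read` and `tools/call` are method names defined by the MCP specification. The sketch below only builds and parses message dictionaries (no transport, no SDK); the `historian://` URI and the `vibration_rms` tool are hypothetical examples, not part of any real server.

```python
import json
from itertools import count

# MCP messages are JSON-RPC 2.0 objects. "resources/read" and "tools/call" are
# method names from the MCP specification; the URI and tool name used in the
# example at the bottom are hypothetical.
_request_ids = count(1)


def make_request(method: str, params: dict) -> dict:
    """Build a JSON-RPC 2.0 request with a fresh integer id."""
    return {"jsonrpc": "2.0", "id": next(_request_ids), "method": method, "params": params}


def read_resource(uri: str) -> dict:
    """Request the contents of a server-exposed resource (data)."""
    return make_request("resources/read", {"uri": uri})


def call_tool(name: str, arguments: dict) -> dict:
    """Ask the server to run one of its exposed tools (functions)."""
    return make_request("tools/call", {"name": name, "arguments": arguments})


def tool_result_text(response: dict) -> str:
    """Concatenate the text items of a tools/call result."""
    result = response["result"]
    if result.get("isError"):
        raise RuntimeError("tool reported an error: " + str(result["content"]))
    return "".join(item["text"] for item in result["content"] if item["type"] == "text")


if __name__ == "__main__":
    print(json.dumps(read_resource("historian://bioreactor-01/temperature/latest")))
    print(json.dumps(call_tool("vibration_rms", {"asset_id": "filler-03"})))
    reply = {"jsonrpc": "2.0", "id": 2,
             "result": {"content": [{"type": "text", "text": "0.42"}], "isError": False}}
    print(tool_result_text(reply))  # 0.42
```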

Emerging uses (outside pharma or partially in pharma) include:

- Lab-scale manufacturing: in “Beyond Formal Semantics for Capabilities and Skills: Model Context Protocol in Manufacturing” (Silva et al., 2025), the authors show how MCP helps LLM-based agents in a lab-scale manufacturing system plan and invoke functions while handling constraints.

- Medical concept standardization: when mapping medical terms to the OMOP CDM, MCP connects LLMs to real dictionaries and APIs, improving mapping quality and reducing hallucinations.

MCP is still young, but it shows promise for high-stakes applications that need accuracy, auditable behavior, security, and contextually rich data.

Market & Industry Trends: Digital Twins, AI, Predictive Maintenance in Pharma

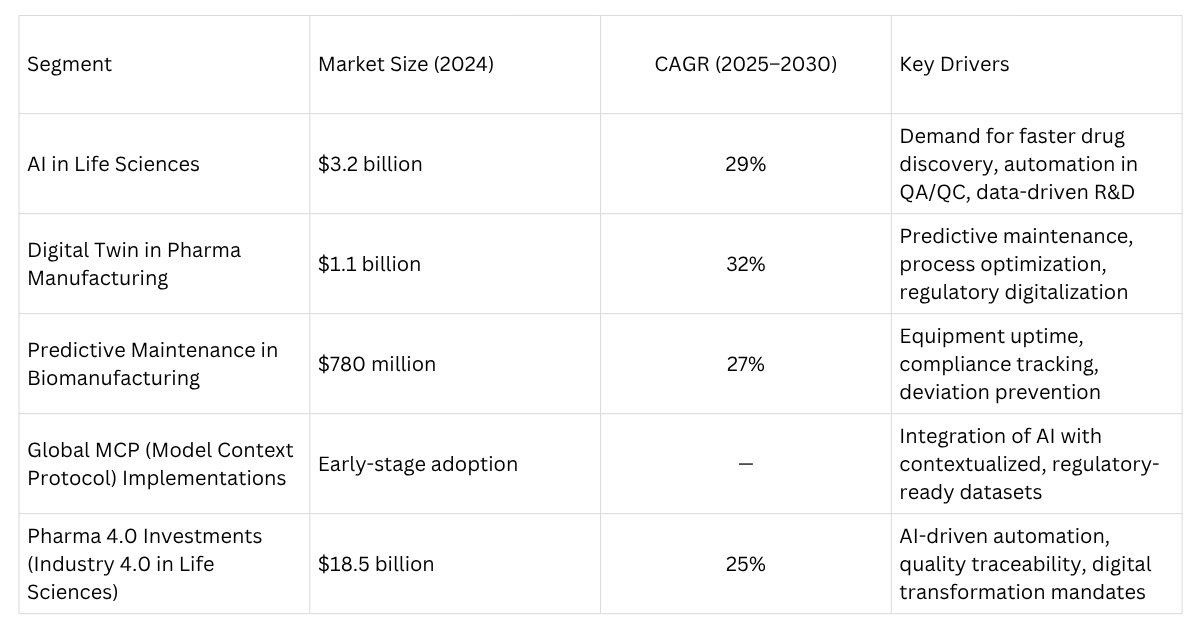

To understand how big the opportunity is, here are recent market numbers, growth rates, use cases, etc.

These numbers show strong growth and room for enhanced efficiency. But successful implementation requires robust, interoperable, secure integration between multiple systems, tools, and AI models. That’s where MCP can play a significant role.

How MCP Enables Better Integration: Roles & Mechanisms in Pharma Use Cases

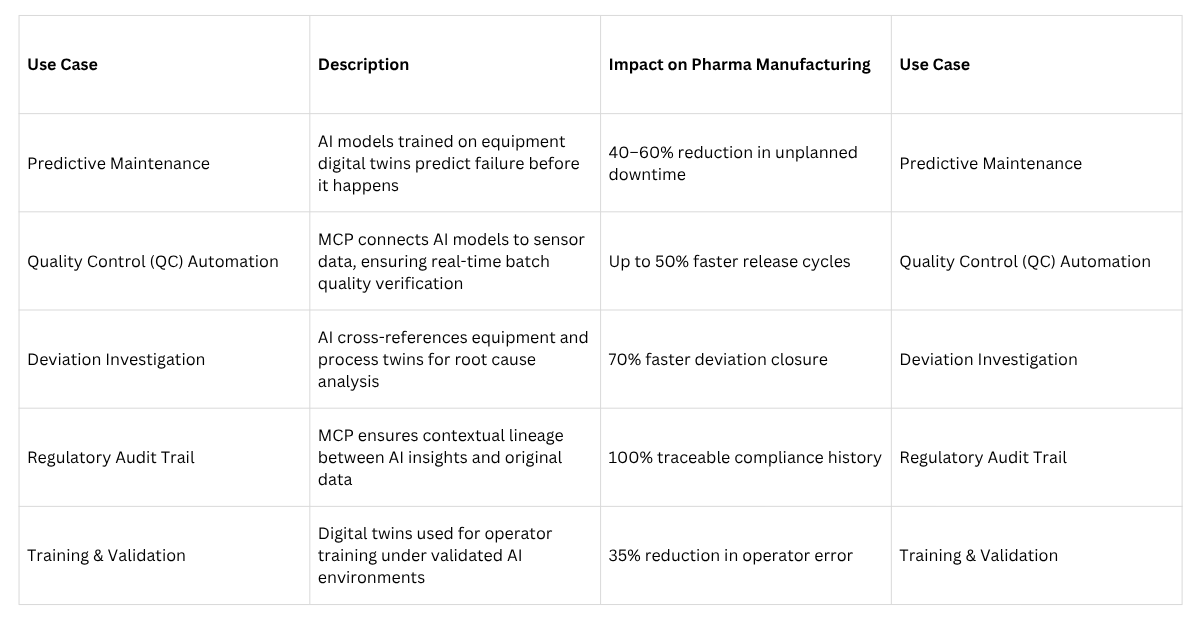

Now, let’s drill down on how MCP specifically contributes to integrating AI with digital twins for predictive maintenance and quality control in pharma / life sciences. I break this into several themes:

- Data / Context Integration & Interoperability

- Real‐Time Monitoring, Anomaly Detection & Predictive Maintenance

- Quality Control, Deviation Detection & Regulatory Requirements

- Security, Auditability & Compliance

- Scalability, Reuse, and Lifecycle Management

- Challenges & Limitations

1. Data / Context Integration & Interoperability

One of the biggest barriers in pharma is fragmented data: equipment sensors, lab systems, batch records, environmental sensors, manual logs, QC / analytical data, ERP, and maintenance logs. These sources often live in silos and differ in format, update frequency, and degree of structure.

- MCP’s Role: MCP acts as a protocol/interface that exposes such data sources and tools (e.g., sensor streams, historical batch data, QC lab system, maintenance logs) in a standardized way. AI applications and digital twins act as clients, requesting data or triggering actions. Because MCP is standardized, new data sources and tools can be plugged in with less custom integration effort.

- Context Tagging and Metadata: For predictive and QC use, models need to know when, how, and under what conditions data was collected: for instance, the temperature, humidity, batch inputs, and historical equipment status. MCP servers can return data together with metadata and context tags (e.g., timestamp, location, environmental parameters), so the downstream AI/digital twin has that context; without it, predictions or anomaly detection may be inaccurate.

- Use of “Resource Pools” / Tool Exposure: MCP exposes not only data but also tools/functions, for example, a function that computes vibration analysis from raw accelerometer data, or one that evaluates statistical process control charts. The digital twin and AI can then call these as needed rather than rebuilding each tool in-house.
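A minimal, dependency-free sketch of the ideas above, assuming a hypothetical server that exposes one analysis tool (`vibration_rms`) and attaches asset context from a hypothetical `ASSETS` table to every result. It only mimics the shape of an MCP `tools/call` exchange in-process; a real deployment would use an official MCP SDK and a real transport.

```python
import json
import math
from datetime import datetime, timezone

# Hypothetical, dependency-free stand-in for an MCP server: a tool registry and
# a dispatcher for "tools/call"-shaped JSON-RPC requests. A real server would be
# built with an official MCP SDK and run over a real transport (stdio or HTTP).
TOOLS = {}

# Hypothetical asset metadata attached to every result as context.
ASSETS = {"filler-03": {"location": "Fill line 3", "calibrated_on": "2025-01-15"}}


def tool(fn):
    """Register a function as a tool under its own name."""
    TOOLS[fn.__name__] = fn
    return fn


@tool
def vibration_rms(asset_id: str, samples: list) -> dict:
    """RMS of raw accelerometer samples, returned together with asset context."""
    if not samples:
        raise ValueError("no samples supplied")
    rms = math.sqrt(sum(x * x for x in samples) / len(samples))
    return {
        "value": rms,
        "unit": "g",  # assumed: same unit as the raw samples
        "asset_id": asset_id,
        **ASSETS.get(asset_id, {}),
        "computed_at": datetime.now(timezone.utc).isoformat(),
    }


def handle(request: dict) -> dict:
    """Dispatch one tools/call request and wrap the output as text content."""
    rid, params = request["id"], request["params"]
    fn = TOOLS.get(params["name"])
    if fn is None:  # protocol-level error; -32602 is JSON-RPC "invalid params"
        return {"jsonrpc": "2.0", "id": rid,
                "error": {"code": -32602, "message": "Unknown tool: " + params["name"]}}
    try:
        payload, failed = fn(**params["arguments"]), False
    except ValueError as exc:  # the tool ran but failed: report inside the result
        payload, failed = {"error": str(exc)}, True
    return {"jsonrpc": "2.0", "id": rid,
            "result": {"content": [{"type": "text", "text": json.dumps(payload)}],
                       "isError": failed}}
```

The two failure paths are deliberate: an unknown tool is a JSON-RPC error, while a tool that runs but fails (here, on empty input) returns a normal result with `isError` set, which mirrors how the MCP specification separates protocol errors from tool execution errors.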

Example: In the case study “Model Context Protocol (MCP) Repairs”, a pharma supply chain distributor integrated IoT data, resource pools, and audit APIs via MCP to detect inventory anomalies using real-time data. The improvements included:

- Inventory accuracy up to 99.8% (from ~92%)

- Faster audit compliance by ~40%

- Zero temperature excursions in high-value biologics storage

- 30% fewer manual reconciliation efforts

This shows how MCP helps integrate data from sensors, external services, and audit tools.

2. Enabling Real‐Time Monitoring, Anomaly Detection & Predictive Maintenance

Once data is reliably accessible via MCP, digital twins and AI can deliver predictive maintenance. Here’s the workflow:

- Setup: Digital twin models replicate critical equipment/processes (e.g., bioreactors, lyophilizers, filling machines). Sensors feed real‐time data (vibration, temperature, pressure, flow, etc.). Historical failure / maintenance data is also present.

- AI models are trained (supervised, unsupervised, possibly hybrid) to detect early signs of failure, drift, and anomalies. They may use ML, deep learning, and root cause analysis.

- MCP facilitates this by exposing the necessary data (sensor streams, environmental context, maintenance history) and tools (anomaly detection functions, domain-specific calculators, thresholding, etc.) in a standardized way, so the AI models can get what they need without custom connectors every time. It also gives the digital twin the inputs it needs to simulate what-if scenarios (e.g., what happens to product stability if a temperature drift continues).

- Statistical & Market Evidence: With digital twin + predictive maintenance + proper contextual data, pharma/manufacturing companies report reductions of unplanned downtime by 20-25% (McKinsey-type estimates).

- Also, in a broader manufacturing predictive maintenance/digital twin market, forecasts show CAGR ~32.6% for the segment from 2025–2030, rising from ~USD 6.9B in 2024 to ~USD 40.2B in 2030. This signals increasing adoption.

- Example: In Singapore, Cambridge + A*STAR developed a digital twin platform (using AI and real-time plant data) to enhance fault detection, system monitoring, and predictive maintenance in pharmaceutical manufacturing.

Thus, MCP supports the data and tool connectivity needed to make predictive maintenance operational and robust.
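The anomaly-detection step in the workflow above can be illustrated with one of the simplest possible detectors, a rolling z-score: flag a reading that sits more than a set number of standard deviations from the recent mean. This is a stand-in for the ML models the text describes, not what any cited study used; `window` and `threshold` are assumed tuning parameters.

```python
from collections import deque
from statistics import mean, stdev


def rolling_zscore_anomalies(values, window=20, threshold=3.0):
    """Indices whose value lies more than `threshold` standard deviations
    from the mean of the preceding `window` values."""
    history = deque(maxlen=window)
    anomalies = []
    for i, x in enumerate(values):
        if len(history) == window:
            mu, sigma = mean(history), stdev(history)
            if sigma > 0 and abs(x - mu) / sigma > threshold:
                anomalies.append(i)
        history.append(x)  # note: flagged values also enter the baseline
    return anomalies


if __name__ == "__main__":
    # Illustrative vibration RMS trend: small normal variation, then one spike.
    normal = [1.0 + 0.01 * (i % 5) for i in range(30)]
    print(rolling_zscore_anomalies(normal + [5.0] + normal[:10]))  # [30]
```

Because flagged values still enter the window, a large spike inflates the standard deviation for the next `window` samples; a production detector might exclude confirmed anomalies from the baseline.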

3. Quality Control, Deviation Detection & Regulatory Requirements

Quality in pharma is non-negotiable. Digital twins and AI bring advanced QC capabilities, but only if context and traceability are correct. Here is where MCP helps:

- Deviation Detection: Real-time sensor data plus digital twin predictions allow detection of drift in process parameters (e.g., temperature, pH, flow). AI models may detect subtle drift before it leads to out-of-spec batches. MCP makes these data streams, along with process metadata, available for analysis.

- Simulation of “what if” Scenarios: Digital twins can simulate changes (e.g., a new supplier, different environmental conditions, or scaled-up production) to see whether CQAs might be violated. AI models need access to context such as material properties, environmental conditions, and historical batch variation, which is often scattered across multiple systems. MCP gives the models a uniform way to access all of it.

- Regulatory Traceability & Audit Trails: Pharma regulatory agencies (like FDA, EMA, etc.) require documented proof of process controls, deviations, corrective actions, stability, etc. Because interactions between the AI/digital twin and tools/data sources go through MCP in a standard message format, they can be logged consistently with metadata. For example, an MCP server can log the inputs, outputs, timestamps, and tools used for each call, which supports audits and compliance.

- Quality by Design (QbD), Continuous Manufacturing: Pharma is pushing strongly toward continuous manufacturing and QbD, where real-time quality monitoring, feedback control, and predictive maintenance are essential. Digital twins and AI help enforce QbD, but the integration (including data flows) must be robust and standardized, which is another area where MCP helps.

- Evidence from Studies: For example, reviews of digital twins in pharma manufacturing suggest that they can reduce compliance risks by up to 40% and production downtime by 20-25%.

- Also, the healthcare digital twin market (which includes quality control, trial modelling, etc.) is growing at high rates (CAGR ~32.3%), showing strong demand for QC/optimisation capabilities.
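For deviation detection, statistical process control (SPC) is the classic rule-based starting point. The sketch below computes 3-sigma control limits from an in-control baseline and applies two standard checks: a point beyond the limits, and eight consecutive points on one side of the center line (one of the Western Electric rules), which catches slow drift before any single point leaves the limits. The pH values are made up for illustration.

```python
from statistics import mean, stdev


def control_limits(baseline):
    """Center line and 3-sigma limits from an in-control baseline.
    Simplification: sigma is the sample standard deviation; individuals charts
    in practice often estimate it from the average moving range instead."""
    center, sigma = mean(baseline), stdev(baseline)
    return center, center - 3 * sigma, center + 3 * sigma


def spc_violations(values, center, lcl, ucl, run_length=8):
    """Return (points beyond the limits, points where a same-side run reaches run_length)."""
    beyond = [i for i, x in enumerate(values) if x < lcl or x > ucl]
    runs, side, count = [], 0, 0
    for i, x in enumerate(values):
        s = (x > center) - (x < center)  # +1 above, -1 below, 0 on the line
        count = count + 1 if (s != 0 and s == side) else (1 if s != 0 else 0)
        side = s
        if count == run_length:
            runs.append(i)  # reported once, when the run first qualifies
    return beyond, runs


if __name__ == "__main__":
    baseline_ph = [7.0, 7.1, 6.9, 7.0, 7.1, 6.9, 7.0, 7.05, 6.95, 7.0]  # illustrative
    center, lcl, ucl = control_limits(baseline_ph)
    new_ph = [7.05] * 8 + [7.5, 6.9]  # sustained upward shift, then a spike
    print(spc_violations(new_ph, center, lcl, ucl))  # ([8], [7])
```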

4. Security, Auditability & Compliance

Given the regulatory nature of pharma, security, data privacy, audit trails, and compliance are key. MCP has design features relevant here:

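- Access control & permissions: Not all tools/data should be exposed to all agents; MCP servers can restrict which clients may see and call which resources and tools, for example with role-based permissions implemented on top of the protocol's authorization layer.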

- Secure protocol / authenticated endpoints: MCP connections can run over authenticated, encrypted transports, so external data and services are accessed only by verified clients.

- Audit logs / metadata: Because every interaction between AI agents, tools, and data sources passes through MCP, servers can log which data/tools were used, when, with what inputs, and with what outputs. This is important for regulatory inspection.

- Traceability in Supply Chain: In the “MCP Repairs” case study, inventory anomalies and supply chain compliance (DSCSA in the USA) were addressed using IoT, MCP resource pools, and audit APIs. Reported improvements: inventory accuracy rose to 99.8%, audit compliance became 40% faster, and biologics storage had zero temperature excursions.
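A sketch of how an MCP server might combine the permission and audit points above, assuming a hypothetical role-to-tool policy (MCP itself leaves such policies to the implementer). Each call, allowed or denied, is logged with timestamp, role, tool, inputs, and outputs, and every entry carries the hash of the previous one, so editing an earlier entry breaks the chain. Tool results must be JSON-serializable for the hashing to work.

```python
import hashlib
import json
from datetime import datetime, timezone

# Hypothetical role -> permitted-tool policy. MCP does not define roles itself,
# so a policy like this would live in the server implementation.
PERMISSIONS = {
    "maintenance_agent": {"vibration_rms", "read_maintenance_log"},
    "qc_agent": {"spc_check", "read_batch_record"},
}

AUDIT_LOG = []  # sketch only; production needs durable, append-only storage


def _append(entry: dict) -> None:
    """Chain each entry to the previous one by hash so later edits are detectable."""
    entry["prev_hash"] = AUDIT_LOG[-1]["hash"] if AUDIT_LOG else "0" * 64
    body = json.dumps(entry, sort_keys=True).encode()
    entry["hash"] = hashlib.sha256(body).hexdigest()
    AUDIT_LOG.append(entry)


def audited_call(role: str, tool_name: str, arguments: dict, tools: dict):
    """Enforce the role policy, run the tool, and log inputs, outputs, and time."""
    entry = {
        "timestamp": datetime.now(timezone.utc).isoformat(),
        "role": role,
        "tool": tool_name,
        "arguments": arguments,
        "allowed": tool_name in PERMISSIONS.get(role, set()),
    }
    if not entry["allowed"]:
        _append(entry)  # denied attempts are logged too
        raise PermissionError(f"{role} may not call {tool_name}")
    entry["result"] = tools[tool_name](**arguments)
    _append(entry)
    return entry["result"]
```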

5. Scalability, Reuse, and Lifecycle Management

Pharma operations often involve many sites, many assets, multiple types of equipment, and a lot of variation in processes. Hence, modularity, scalability, and reuse are very valuable. MCP helps:

- Standardization reduces custom work: Once you build an MCP server exposing data from one type of sensor or system, that server can be reused for other assets or sites, reducing engineering overhead.

- Easier extension / upgrades: Introducing new sensors, new analytic tools, or modifying digital twin models becomes easier when connections are via a standardized protocol rather than many one-off integrations.

- Versioning and model lifecycle: When digital twin models are updated or AI models are retrained, a standardized MCP layer makes controlled rollouts, versioned endpoints, and consistent data schemas and context handling easier to manage.

- Cross-site / global pharma plants: For global pharma manufacturers with many plants, MCP can provide a common integration layer so AI + DT tools are interoperable across locations.
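Versioned endpoints can be as simple as keeping every validated version of a tool addressable and letting clients pin one. The registry below is a hypothetical illustration of that pattern, not an MCP feature: the released version is served by default, while a pilot client can request a newer one explicitly.

```python
from dataclasses import dataclass, field
from typing import Callable, Dict, Optional


@dataclass
class ToolRegistry:
    """Hypothetical registry holding several versions of each tool, so a site
    can keep serving a validated version while piloting a newer one."""
    versions: Dict[str, Dict[int, Callable]] = field(default_factory=dict)
    released: Dict[str, int] = field(default_factory=dict)  # default version per tool

    def register(self, name: str, version: int, fn: Callable, release: bool = False) -> None:
        self.versions.setdefault(name, {})[version] = fn
        if release:
            self.released[name] = version

    def resolve(self, name: str, pinned: Optional[int] = None) -> Callable:
        """Return the pinned version if given, otherwise the released default."""
        version = pinned if pinned is not None else self.released.get(name)
        try:
            return self.versions[name][version]
        except KeyError:
            raise LookupError(f"{name} v{version} is not registered") from None


if __name__ == "__main__":
    registry = ToolRegistry()
    registry.register("drift_score", 1, lambda xs: max(xs) - min(xs), release=True)  # validated
    registry.register("drift_score", 2, lambda xs: xs[-1] - xs[0])                   # pilot only
    print(registry.resolve("drift_score")([1, 5, 2]))            # 4, served by default
    print(registry.resolve("drift_score", pinned=2)([1, 5, 2]))  # 1, pilot client
```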

Case Studies & Examples

Here are more detailed cases (real or near-real) to illustrate how MCP + AI + Digital Twins work in pharma / life sciences.

- MCP Repairs — Pharma Distributor / Inventory & Regulatory Case

- Objective: Resolve inventory anomalies (serialized product mismatches, temperature excursions, etc.), ensure compliance (e.g., DSCSA), and enable real-time monitoring.

- Solution: IoT sensors + resource pools via MCP; audit APIs that comply with DSCSA; real-time anomaly detection and automated repair workflows.

- Results:

- Inventory accuracy rose to ~99.8% from ~92% before.

- Audit compliance processes sped up by ~40%.

- Zero temperature excursions in biologics storage.

- Manual reconciliation reduced by ~30%.

- Singapore / CARES + A*STAR Platform for Manufacturing

- In 2025, a project in Singapore (CARES + A*STAR) developed a digital twin platform that uses AI plus real-time plant data to enhance fault detection, system monitoring, and predictive maintenance in pharma manufacturing.

- The platform is still at the development/commercialisation stage, but it shows DT, AI, and real-time data being integrated.

- Efficiency Gains via Digital Twins more broadly

- Digital twins reduce downtime by ~20-25% (McKinsey / industry studies) by allowing earlier detection of failures and faster resolution.

- Time to market for new drugs can be cut 10-15% due to virtual simulation of manufacturing scale-up, QC modelling, etc.

- Market Data

- Digital Twins in Healthcare projected to grow from ~USD 2.81B in 2025 to ~USD 11.37B by 2030 (CAGR ~32.3%)

- Predictive maintenance + digital twin segment: ~USD 6.9B in 2024 to ~USD 40.2B by 2030.
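The quoted growth rates can be checked with the standard compound annual growth rate formula, CAGR = (end / start)^(1 / years) - 1. The healthcare figures reproduce the stated ~32.3%. For the predictive maintenance + digital twin segment, the 2024 and 2030 endpoints imply about 34.1% per year over six years; the ~32.6% quoted earlier is for 2025–2030, so it presumably starts from a 2025 value that is not given here.

```python
def cagr(start: float, end: float, years: int) -> float:
    """Compound annual growth rate: (end / start) ** (1 / years) - 1."""
    if start <= 0 or years <= 0:
        raise ValueError("start and years must be positive")
    return (end / start) ** (1 / years) - 1


healthcare_dt = cagr(2.81, 11.37, 2030 - 2025)  # USD billions, 2025 -> 2030
pdm_dt = cagr(6.9, 40.2, 2030 - 2024)           # USD billions, 2024 -> 2030
print(f"{healthcare_dt:.1%} {pdm_dt:.1%}")      # 32.3% 34.1%
```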

How MCP Specifically Bridges the Gap: Integrative Role

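To summarise, these are the concrete roles MCP plays in bringing AI and digital twins together for predictive maintenance and quality control in pharma: standardized access to siloed data sources; context and metadata passed along with the data; reusable analytic tools exposed on demand; access control and audit logging for compliance; and integrations that can be reused across assets and sites.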

Challenges, Risks, and Things to Watch

While MCP offers a promising architecture, deploying AI and digital twins still involves many challenges, especially in pharma.

- Regulatory Validation & Acceptance

- For pharma, any model or system that influences production or QC must be validated (software validation, model validation), and digital twin outputs must be reliable and explainable. Using AI and MCP introduces new layers that regulators may scrutinize: how data is handled, which tools are exposed, what failure modes exist, etc.

- Data Quality, Sensor Calibration, Noise, etc.

- No matter how good your protocol or integration is, if sensors give noisy or incorrect readings, or if metadata (like calibration or maintenance history) is incomplete or wrong, predictions or simulations will be wrong. Ensuring high data quality is essential.

- Cybersecurity and Data Privacy

- The risks grow when data sources, tools, and possibly cloud services are connected through MCP. Data must be protected, access permissions kept strict, and endpoint vulnerabilities controlled, with threats such as malicious tools and data exfiltration in mind.

- Complexity & Skill Requirements

- Projects need multidisciplinary teams: domain experts (process, biotech, chemistry), data scientists, AI engineers, software engineers, and validation/regulatory professionals. Some facilities may lack the right talent.

- MCP Maturity

- MCP is still new. While it has a specification, open-source implementations, and community interest, open questions remain: performance at scale, latency for real-time data streaming, tool limitations, standardization of metadata schemas in pharma, and regulatory acceptance.

- Cost & Return on Investment (ROI)

- Sensors, instrumentation, digital twin model development, AI model training, integration, and validation all require up-front investment. The ROI must justify these costs, and not every asset or process will deliver a sufficient return.

- Interoperability Standards Beyond MCP

- Pharma already works within many standards and guidelines (FDA and EMA guidance, ASTM, ISO, GMP, DSCSA, etc.) and with established data systems (e.g., batch record systems, analytical lab information systems, ERP). MCP deployments must align with these, including schema mappings and acceptable validation frameworks.

Forward Outlook: Where MCP + AI + Digital Twins Are Headed in Pharma

Given current trends and technological progress, here are anticipated developments in the next few years, and how MCP is likely to shape them.

- Broader Adoption of MCP in Pharma Platforms

- As MCP becomes more stable, open-source MCP server/client kits adapted for pharma (with domain-specific resource definitions) will emerge. Common modules might appear for bioreactor monitoring, environmental monitoring, QC analytics, EHS tools, etc.

- Pharma companies may begin to demand MCP compatibility from vendors of digital twin platforms, AI analytics tools, and automation systems.

- Regulatory Guidance / Frameworks around AI + Digital Twins + Integration

- Regulatory authorities (FDA, EMA, etc.) are likely to issue clearer guidance on the use of AI / digital twins for QC, predictive maintenance, and requirements for context, traceability, and model validation.

- Regulators may also audit MCP-mediated systems to confirm that the integration meets their requirements (secure, traceable, logged, etc.).

- More Real-Time, Continuous Quality Control (CQC)

- With better integration (via protocols like MCP), digital twins and AI can shift QC toward continuous monitoring rather than end-of-batch testing. Deviation detection, process drift detection, and adaptive control could become more mainstream.

- Digital Twin as a Service (DTaaS) & Edge / On-Device Processing

- For latency and security, some digital twin + AI processing will move toward the edge (on-site / on-device), with MCP servers located locally or in hybrid models, to reduce data transmission delays. This is also useful when internet connectivity or cloud use is restricted.

- Increased Use of Simulations & ‘What-if’ Virtual Scenarios in Scaling Up/Transfer

- Use of digital twins + AI to simulate scaling from lab to pilot to full scale, technology transfer between plants, or changing suppliers, will increase. MCP offers the possibility to plug in supplier data, environment data, etc., to make simulations more realistic.

- AI / ML Advances: Explainability, Hybrid Models, Self-Updating Twins

- AI models that are more interpretable, and therefore more readily accepted by domain experts, will be used more widely, as will hybrid models that combine physics-based modelling with ML and twins that adapt or self-correct based on new data. MCP helps enable these because tools and data flows can be extended or swapped without rebuilding integrations.

- Economic & Sustainability Pressures Driving Optimization

- Pharma companies are increasingly under pressure to reduce waste, energy use, and environmental footprint. Digital twins + AI + MCP can help track material consumption, equipment efficiency, waste, and environmental parameters, leading to sustainability improvements.

Suggested Architecture / Implementation Roadmap

To make this more actionable, here is a suggested high-level architecture and roadmap for a pharma company wanting to leverage MCP + AI + Digital Twins for predictive maintenance & QC.

Architecture Components

- Sensors & IoT Layer: Equipment, environmental sensors; real-time data streams; appropriate calibrations; redundant sensors where needed.

- Edge / Local Data Aggregation: Local data collectors / historians; possibly edge computing for preprocessing, filtering.

- MCP Server(s): Expose required data sources/tools. Secure, with defined roles/permissions. Capable of both streaming and request/response endpoints. Log interactions.

- Digital Twin Models: Virtual replicas of equipment / process; include physics‐based models, possibly hybrid models; simulating expected behaviour, deviation thresholds.

- AI / ML Models / Analytics Layer: Anomaly detection, predictive failure models, QC prediction, process drift, etc.

- Tool / Function Library: QC statistical tools, predictive failure calculators, control chart tools, root‐cause analysis modules, what-if scenario simulators, etc., ideally exposed via MCP.

- Visualization & Dashboarding: For operators, QC staff, maintenance teams, and management.

- Compliance / Audit / Validation Module: Logs, version control, model validation records, regulatory documentation.
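To show how the Digital Twin and Analytics layers can interact, here is a sketch of residual-based fault detection: a physics-based twin predicts what a sensor should read, and large gaps between prediction and measurement are flagged. The first-order thermal model, time constant, and threshold are illustrative assumptions; a real twin would be identified from plant data and validated.

```python
def twin_predict(t0: float, jacket_temps: list, tau_s: float, dt_s: float) -> list:
    """Hypothetical first-order vessel model, dT/dt = (T_jacket - T) / tau,
    stepped with explicit Euler. Returns one prediction per jacket reading."""
    if not 0 < dt_s / tau_s < 1:
        raise ValueError("choose 0 < dt_s < tau_s for a stable, non-oscillating step")
    temps = [t0]
    for tj in jacket_temps[:-1]:
        temps.append(temps[-1] + dt_s / tau_s * (tj - temps[-1]))
    return temps


def flag_deviations(measured: list, predicted: list, threshold: float) -> list:
    """Indices where the sensor departs from the twin by more than `threshold`."""
    return [i for i, (m, p) in enumerate(zip(measured, predicted)) if abs(m - p) > threshold]


if __name__ == "__main__":
    predicted = twin_predict(20.0, [30.0] * 5, tau_s=100.0, dt_s=10.0)
    measured = predicted[:4] + [25.0]  # last reading jumps well above the model
    print(flag_deviations(measured, predicted, threshold=1.0))  # [4]
```

The twin here runs open-loop from the initial temperature; practical implementations usually re-synchronize the model state with measurements (e.g., with a state estimator), and the residual series is a natural input for the AI/ML layer.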

Roadmap Steps

- Assess Current State

- Map existing data sources, tools, and sensor infrastructure. Where are silos, gaps? What are the current failure causes, downtime, and quality deviations?

- Define Use Cases

- Identify pilot assets / processes where predictive maintenance + QC gains are most likely to deliver ROI. E.g., a critical bioreactor, a filling line, etc.

- Define Data & Metadata Requirements

- What sensor data, environmental data, maintenance history, etc., is required? What metadata (timestamps, location, calibration, etc.)? Define data schema.

- Design MCP Integration

- Select or build MCP server(s) to expose data sources and tools. Define clients (AI agents, digital twin modules) that consume them. Implement authentication, access control, and logging.

- Develop Digital Twin + AI Models

- Build or adapt digital twin models for the pilot assets; gather historical data; train predictive models; ensure explainability.

- Validation & Regulatory Review

- Validate model predictions, ensure accuracy, test failure scenarios, and document everything. Engage quality/regulatory teams early to ensure acceptability.

- Deploy, Monitor, Measure

- Run the pilot; monitor predictive maintenance results (downtime, failures avoided), QC deviation rates; measure ROI, cost savings, compliance improvements.

- Scale Up

- Based on pilot success, roll out to more assets, more sites. Reuse MCP servers/resources/tools; refine models and twins.

- Continuous Improvement

- Include feedback loops: when predictions fail, feed the data back; monitor drift; retrain models regularly; update twins; track regulatory changes.
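For the “Define Data & Metadata Requirements” step, writing the schema down as code makes it enforceable at ingestion. The field names, the one-unit-per-quantity rule, and the checks below are hypothetical examples of such a schema, not a standard.

```python
from dataclasses import dataclass
from datetime import date, datetime, timezone

# Hypothetical site convention: one agreed unit per measured quantity.
ALLOWED_UNITS = {"temperature": "degC", "pressure": "kPa", "pH": "pH"}


@dataclass(frozen=True)
class SensorReading:
    sensor_id: str
    quantity: str
    value: float
    unit: str
    timestamp: datetime  # expected to be timezone-aware
    location: str
    calibration_due: date

    def problems(self, today: date) -> list:
        """Return human-readable schema/metadata violations (empty list = OK)."""
        issues = []
        if ALLOWED_UNITS.get(self.quantity) != self.unit:
            issues.append(f"unit {self.unit!r} not agreed for {self.quantity!r}")
        if self.timestamp.tzinfo is None:
            issues.append("timestamp lacks a timezone")
        if self.calibration_due < today:
            issues.append("calibration overdue")
        return issues


if __name__ == "__main__":
    reading = SensorReading("TT-101", "temperature", 36.9, "degC",
                            datetime(2025, 3, 1, 8, 0, tzinfo=timezone.utc),
                            "Bioreactor BR-01", date(2025, 6, 30))
    print(reading.problems(today=date(2025, 3, 1)))  # []
```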

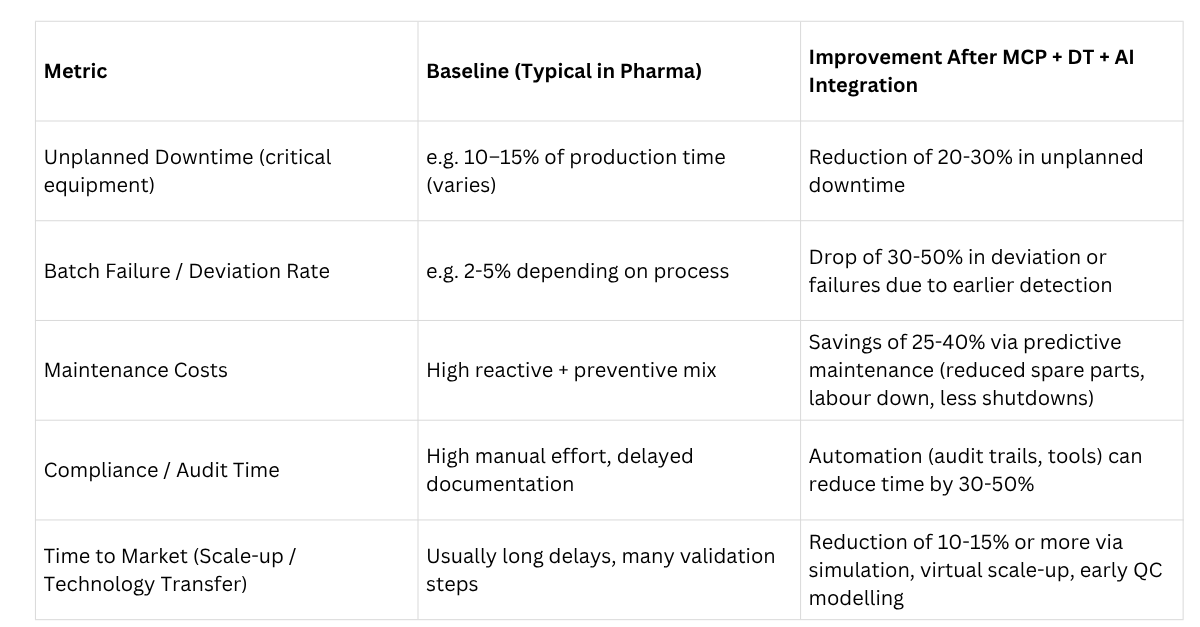

Example Metrics & What Improvement Might Look Like

To help understand what kind of improvements are realistic, here are sample metrics and expected gains from digital twin + AI + MCP integration, based on industry data + extrapolation:

These are estimates; actual numbers depend heavily on the quality of implementation, the criticality of the assets, the quality of the data infrastructure, the regulatory environment, and other factors.

How MCP Impacts Quality Control and Predictive Maintenance Specifically in Life Sciences / Pharma

Here are some domain-specific points.

- Batch & Continuous Manufacturing: For pharma transitioning from batch to continuous manufacturing (or already operating continuously), parameters drift more subtly; continuous QC is needed. Digital twins + AI with MCP make continuous monitoring and corrective control more feasible.

- Biopharma / Bioprocessing: Bioreactors are sensitive to parameters like dissolved oxygen (DO), pH, temperature, agitation, and feed rates; if these drift, off-spec growth, low yield, or contamination can result. Using MCP, real-time environmental sensors, feed sensors, and online analytical instruments can be connected; the digital twin can forecast biomass growth, metabolite accumulation, etc.; and AI can model deviations and suggest corrective actions.

- Fill/Finish / Aseptic Processing: Critical operations; any failure is very expensive (waste, regulatory, safety). Use of digital twins to simulate line performance, fluid dynamics, sterile conditions; AI anomaly detection of pressures, contamination risk; MCP ensures all needed data (pressure, airflow, temperature, operator logs, etc.) are available and tools to analyze are plugged in.

- Analytical QC Laboratories: Even QC labs can benefit. Lab instrument data, historical QC results, environmental conditions, and reagent logs can feed a digital twin of the QC process; AI can detect drift and instrument errors; and MCP can expose lab instrument outputs directly with their metadata preserved.

- Supply Chain / Storage: For drugs requiring cold chain, such as biologics and vaccines, temperature deviations can destroy the product. MCP can connect real-time temperature sensors, environmental sensors, storage logs, etc.; AI and digital twins can then detect excursions and predict their risk, helping preserve product quality.
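For the cold-chain case, a minimal detect-and-predict sketch: sum the time spent outside the storage range, and fit a least-squares line to recent readings to estimate how long until the upper limit is crossed. The 2-8 °C range is an assumption (a common refrigerated-storage range; actual limits come from the product's labeling), and a linear trend is a deliberately crude forecaster.

```python
# Assumed storage range for a refrigerated biologic (check the product label).
LOW_C, HIGH_C = 2.0, 8.0


def excursion_minutes(temps, interval_min):
    """Minutes outside the allowed range, treating each reading as one interval."""
    return sum(interval_min for t in temps if t < LOW_C or t > HIGH_C)


def minutes_to_breach(temps, interval_min, window=6):
    """Least-squares line through the last `window` readings; estimated minutes
    until it reaches HIGH_C, or None if the trend is not rising."""
    recent = temps[-window:]
    if len(recent) < 2:
        return None
    xs = [i * interval_min for i in range(len(recent))]
    mx, my = sum(xs) / len(xs), sum(recent) / len(recent)
    sxx = sum((x - mx) ** 2 for x in xs)
    sxy = sum((x - mx) * (y - my) for x, y in zip(xs, recent))
    slope = sxy / sxx
    if slope <= 0:
        return None
    intercept = my - slope * mx
    crossing = (HIGH_C - intercept) / slope  # time on the fitted line where T = HIGH_C
    return max(0.0, crossing - xs[-1])       # relative to the latest reading


if __name__ == "__main__":
    readings = [5.0, 5.5, 6.0, 6.5, 7.0, 7.5]  # one reading every 10 minutes
    print(excursion_minutes(readings, 10))      # 0
    print(minutes_to_breach(readings, 10))      # about 10 minutes
```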

Potential Pitfalls / What to Validate

- Overfitting / False Positives in Predictive Models: With many sensors, many parameters, AI models may detect patterns that are spurious; they need careful validation, thresholding, and false alert suppression.

- Model Drift Over Time: Processes change, equipment ages, and new batches bring new raw materials. Digital twins and AI models must be updated or adapted, and the MCP integration must support versioning and model monitoring.

- Latency / Real-Time Constraints: For some predictive maintenance or QC tasks, latency matters. If data takes too long to reach AI models (e.g., via cloud), corrections may be too late. Edge/Local MCP servers or a hybrid architecture may be needed.

- Regulatory / Validation Overhead: Each model, tool, and integration must be validated, especially if influencing batch release. That takes time and documentation; it must be planned.

- Security Risks: Endpoints exposed via MCP increase the attack surface; strong security (authentication, encryption, vetting of tools, audits) is needed.
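A common guard against the false positives mentioned above is a persistence (k-of-n) filter: raise an alert only when at least `k` of the last `n` raw detections are positive, and only once per episode. The values of `k` and `n` below are assumed defaults that would need tuning and validation for each asset.

```python
from collections import deque


def debounce(flags, k=3, n=5):
    """Alert at step i only if at least k of the last n raw flags are set,
    and only on entry into the alarm state (one alert per episode)."""
    recent = deque(maxlen=n)
    alerts = []
    in_alarm = False
    for i, flag in enumerate(flags):
        recent.append(bool(flag))
        active = sum(recent) >= k
        if active and not in_alarm:
            alerts.append(i)
        in_alarm = active
    return alerts


if __name__ == "__main__":
    raw = [0, 1, 0, 0, 0, 1, 1, 1, 1, 0, 0, 0, 0, 0]  # one blip, then a real episode
    print(debounce(raw))  # [7]
```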

Recent Developments & Research

Here are some of the most recent publications or announcements relevant to MCP + Digital Twin + AI in pharma or closely analogous domains.

- “Beyond Formal Semantics for Capabilities and Skills: Model Context Protocol in Manufacturing” (Silva et al., 2025) demonstrates MCP in a lab manufacturing setup, enabling LLM agents to invoke resource functions while handling constraints. It shows that MCP can simplify the representation of capabilities/skills without heavy manual semantic modeling.

- “An Agentic Model Context Protocol Framework for Medical Concept Standardization” uses MCP to map medical terms to standard concepts (OMOP CDM), improving efficiency and accuracy and reducing hallucinations by connecting LLMs to external lexical resources and APIs. This is relevant because medical and QC data often use many controlled vocabularies and ontologies.

- Digital twin platforms developed in Singapore (Cambridge / A*STAR) are being commercialized via spin-off companies; they combine DT, AI, and real-time plant data for fault detection, system monitoring, and predictive maintenance.

- Market data, as cited earlier, show sharp growth in the Pharma 4.0, digital twin, and predictive maintenance markets.

Practical Recommendations: What a Pharma Manufacturer Should Do to Leverage MCP + AI + Digital Twin

- Start with a Well-Defined Pilot

- Choose a high-value, high-risk weak point in operations (e.g., a machine with frequent breakdowns, or a QC lab with drift issues).

- Ensure data availability for that asset: sensors, historical data, QC records, etc.

- Form a Cross-Functional Team

- Include operations, process engineering, QA/QC, instrumentation, data science, IT/security, and regulatory.

- Establish Data Schema & Metadata Standards Early

- Define what context matters: sensor metadata (location, calibration, maintenance), batch metadata, and environmental metadata.

- Decide on standard units, formats, sampling frequencies, etc.

- Implement MCP Infrastructure

- Deploy MCP server(s) that expose necessary data/tools. Ensure robust security, role-based access.

- Choose or build clients (AI agents/digital twin modules) that can consume these resources reliably.

- Develop Digital Twin + AI Models

- Use domain knowledge + historical data to build twin models; validate them carefully.

- Train AI predictive maintenance models, QC anomaly detection, etc.

- Validation, Regulatory & QA Review

- Validate the model outputs, twin behaviour, and decision boundaries.

- Prepare documentation, SOPs, validation records, and exceptional case handling.

- Monitoring, Feedback & Improvement

- Continuously monitor the performance (prediction accuracy, false alarms, maintenance savings, QC improvements).

- Refine models/twins, retrain as needed.

- Scale & Institutionalize

- Once the pilot shows value, extend the system to other equipment/processes/sites.

- Embed MCP / DT / AI in standard practice.

- Maintain Governance & Security

- Governance over who can access which data/tools, oversight, and audits.

- Ensure cybersecurity measures are in place and reviewed periodically.

Conclusion

Integrating AI with Digital Twins for predictive maintenance and quality control in pharma / life sciences has the potential to deliver substantial improvements: reducing downtime, increasing batch success, improving regulatory compliance, cutting costs, accelerating time to market, and improving sustainability.

The Model Context Protocol (MCP) is a recent open standard that acts as a linchpin in this integration. By offering a standardized, secure, context-aware way for AI systems to access external data sources, tools, and services, MCP helps overcome many of the traditional integration challenges: silos, inconsistent metadata/context, lack of tool reuse, and regulatory traceability.

While still emerging, MCP has already been applied in supply chain, medical ontology, and lab/manufacturing scale cases. Market data shows strong growth in related segments (digital twins, predictive maintenance, pharma 4.0). For pharma manufacturers, starting with a well-designed pilot, ensuring data/metadata quality, engaging regulatory authorities early, implementing secure and audited MCP infrastructure, and measuring outcomes are the keys to success. Atlas Compliance also extends this vision, integrating with real-time supply chain monitoring to ensure transparency, compliance traceability, and faster quality decisions across global pharma operations.